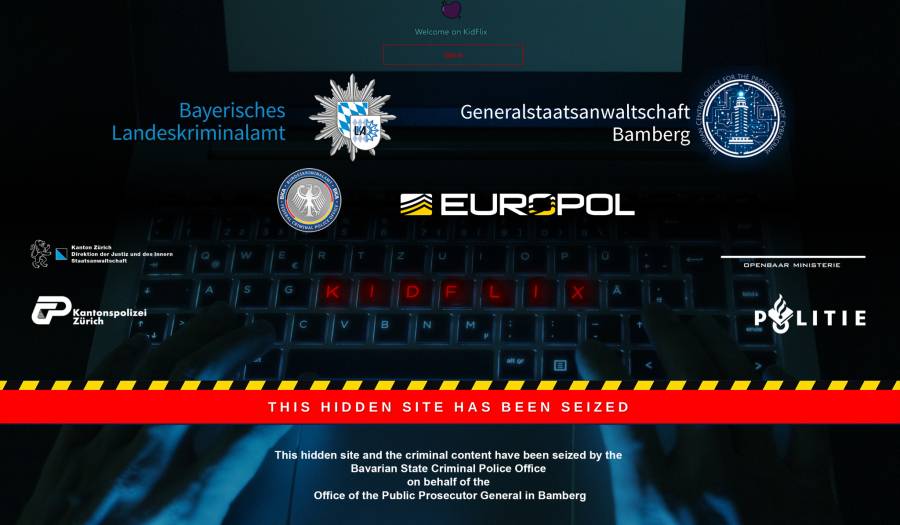

They also seized 72,000 illegal videos from the site and personal information of its users, resulting in arrests of 1,400 suspects around the world.

Wow

1,393 suspects identified; 79 suspects arrested; over 3,000 electronic devices seized; 39 children protected.

Imagine if humans evolved enough to self-solve the problem of liking this shit.

And it didn’t even require sacrificing encryption huh!

“See, we caught these guys without doing it, think of how many more we can catch if we do! Like all the terrorists America has caught by violating their privacy. …Maybe some day they will.”

Basically the only reason I read the article is to know if they needed a “backdoor” in encryption. Guess they don’t need it, like everyone with a little bit of IT knowledge always told them.

Goddam what an obvious fucking name. If you wrote a procedural cop show where the child traffickers ran a site called KidFlix, you’d be laughed out of the building for being so on-the-nose.

Depends on your taste for stories and the general atmosphere. I think in better parts of Star Wars EU this would make sense (or it wouldn’t, but the right way, same as in reality).

Here’s a reminder that you can submit photos of your hotel room to law enforcement, to assist in tracking down CSAM producers. The vast majority of sex trafficking media is produced in hotels. So being able to match furniture, bedspreads, carpet patterns, wallpaper, curtains, etc in the background to a specific hotel helps investigators narrow down when and where it was produced.

Wouldn’t this be so much better if we got hoteliers on board instead of individuals?

They’re only concerned with the room fees.

Don’t see, don’t tell.

Sadly.

I worked in customer service a long time. No one was trained on how to be law enforcement and no one was paid enough to be entrusted with public safety beyond the common sense everyday people have about these things. I reported every instance of child abuse I’ve seen, and that’s maybe 4 times in two decades. I have no problem with training and reporting, but you have to accept that the service staff aren’t going to police hotels.

I didn’t mean to imply all of the hotels and motels were “In On It.”

Nice to know. Thanks.

Thank you for posting this.

During the investigation, Europol’s analysts from the European Cybercrime Centre (EC3) provided intensive operational support to national authorities by analysing thousands of videos.

I don’t know how you can do this job and not get sick because looking away is not an option

You do get sick, and I would be most surprised if they didn’t allow people to look away and take breaks/get support as needed.

Most emergency line operators and similar kinds of inspectors get them, so it would be odd if they did not.

Yes, my wife used to work in the ER. She still tells the same stories over and over again 15 years later, because the memories of the horrible shit she saw don’t go away.

Indeed, but in my country the psychological support is even mandatory. Furthermore, I know there have been pilots using ML to go through the videos. When the system detects explicit material, an officer has to confirm it. But it prevents them going through it all day every day for each video. I think Microsoft has also been working on a database of hashes provided by law enforcement to automatically detect material that has already been identified. All in all, a gruesome job, but fortunately technology is alleviating the harshest activities bit by bit.
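A minimal sketch of that hash-database idea, assuming plain SHA-256 exact matching. As far as I know, real systems use more robust hashes that survive re-encoding, which is not shown here. The names `sha256_of`, `needs_human_review`, and `known_hashes` are made up for illustration and do not come from any real tool.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read fixed-size chunks so large video files never sit fully in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def needs_human_review(path: Path, known_hashes: set[str]) -> bool:
    """True if the file is not in the already-identified set (hypothetical helper)."""
    return sha256_of(path) not in known_hashes
```

The point of the design is only that a file matching an already-identified hash can be flagged without anyone having to watch it again; anything unmatched still goes to a person.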

And this is for law enforcement level of personnel. Meta and friends just outsource content moderation to low-wage countries and let the poors deal with the PTSD themselves.

Let’s hope that’s what AI can help with, instead of techbrocracy

This kind of shit is why I noped out of the digital forensics field. I would have killed myself if I had to see that shit every day.

I’m sure many of them numb themselves to it, and pretend it isn’t real in order to do the job. Then unfortunately, I’m sure some of them get addicted themselves.

Similar to undercover cops who do drugs while undercover, then get addicted to the drugs.

Kidflix sounds like a feature on Nickelodeon. The world is disgusting.

Or a Netflix for children/video editing app for primary schoolers in the early 2000s/late 1900s.

The name of it sounds like a streaming service for children’s movies and TV shows. Like, Netflix for kids. In the past 5 years I have seen at least 3 deepweb social communities that started out normally, with a lot of people talking shit and enjoying anonymous free speech. Then I log in a couple weeks or months later to find CP being posted and no mods doing anything to stop it. In all those cases, I reported the site to the FBI anonymously and erased my login from my password manager.

Massive congratulations to Europol and its partners in taking this shit down and putting these perverts away. However, they shouldn’t rest on their laurels. The objective now is to ensure that the distribution of this disgusting material is stopped outright and that no further children are harmed.

The objective now is to ensure that the distribution of this disgusting material is stopped outright and that no further children are harmed.

Sure, it’ll only cost you every bit of your privacy as governments make illegal and eliminate any means for people to communicate without the eye of Big Brother watching.

Every anti-privacy measure that governments put forward is always like "We need to be able to track your location in real time, read all of your text messages and see every picture that your phone ever takes so that we can catch the .001% of people who are child predators. Look at how scary they are!

Why are you arguing against these anti-pedophile laws?! You don’t support child sex predators do you?!"

This also helps child predators and other traffickers.

Having backdoors and means to create stalkerware and spy on people in various ways benefits those who have energy to use them and some safety. Lack of truly private communications also benefits them. The victims generally have very little means to ask for help without the criminals knowing that.

Human trafficking, sexual exploitation, drugs, all these things are high-value crime. They benefit law enforcement getting part of the pie, which means that law enforcement having better ability to surveil communications will not help against them: the criminals will generally know what is safe for them and what is not, and they will get assistance in such services.

Surveillance helps against non-violent crime - theft, smuggling, fraud, and usually only low-value operations.

Surveillance doesn’t help against high-value crime with enough incentive to make connections in law enforcement, and money finds a way, so those connections are made and operations continue.

Giving more power to law enforcement means law enforcement trading it in relationship with organized crime. To function better, it needs more transparent, clean and accountable organization, not more power.

But all this is not important, when someone is guilt-shaming you into giving up your full right, you should just tell them to fuck off. This concerns privacy.

This also concerns guns. It’s hard to find arguments in favor of gun ownership (I mean combat arms, not handguns or hunting rifles) because successful cases for it are rare by nature. That’s normal: you generally don’t need a combat rifle in your life, just as your country generally doesn’t need a nuke, yet it has one and many more. Unsuccessful cases (a bad outcome that nonetheless proves the need for gun ownership) are more common but hard to notice: it’s every time you obey when you shouldn’t (by that I mean that you harm others by obeying).

Point being - no person telling you that dignity should be traded for better life has any idea. You are not a criminal for desiring and achieving privacy, you are also not a criminal for doing the same with means to defend yourself, you are also not a criminal for saying all politicians and bureaucrats of your country are shit-swimming jerks and should be fired, and even demanding it. And if someone makes a law telling you differently, that’s not a law, just someone forgot they are not holding Zeus by the beard.

Then end up USA

USA ain’t got shit on the Eastern Bloc when it comes to sex trafficking.

On average, around 3.5 new videos were uploaded to the platform every hour, many of which were previously unknown to law enforcement.

Absolutely sick and vile. I hope they honey potted the site and that the arrests keep coming.

I just got ill

Wow, with such a daring name as well. Fucking disgusting.

I once saw a list of defederated Lemmy instances. In most cases, and I mean like 95% of them, the reason for the defederation was pretty much in the instance name. CP everywhere. Humanity is a freaking mistake.

I regularly see people on Lemmy advocating for pedophilia, at LEAST every 3 months as a popular, upvoted stance. I argue with them in my history

What the fuck, I’ve never run into anyone like that. Don’t even wanna know where you do this regularly.

I feel like what he’s trying to say is that it shouldn’t be the end of the world if a kid sees a sex scene in a movie, like it should be ok for them to know it exists. But the way he phrases it is questionable at best.

When I was a kid I was forced to leave the room when any intimate scenes were in a movie and I honestly do feel like it fucked with my perception of sex a bit. Like it’s this taboo thing that should be hidden away and never discussed.

Removed by mod

I’m sorry but classifying that as advocating for pedophilia is crazy. all they said is they don’t know about studies regarding it so they said “probably” instead of making a definitive statement. you took that word and ran with it; your response is extremely over the top and hostile to someone who didn’t advocate for what you’re saying they advocate for.

It’s none of my business what you do with your time here but if I were you I’d be more cool headed about this because this is giving qanon.

Even then, a common bit you’ll hear from people actually defending pedophilia is that the damage caused is a result of how society reacts to it or the way it’s done because of the taboo against it rather than something inherent to the act itself, which would be even harder to do research on than researching pedophilia outside a criminal context already is to begin with. For starters, you’d need to find some culture that openly engaged in adult sex with children in some social context and was willing to be examined to see if the same (or different or any) damages show themselves.

And that’s before you get into the question of defining where exactly you draw the age line before it “counts” as child sexual abuse, which doesn’t have a single, coherent answer. The US alone has at least three different answers to how old someone has to be before having sex with them is not illegal based on their age alone (16-18, with 16 being most common), with many having exceptions that go lower (exceptions when the partners are close “enough” in age are pretty common). For example, in my state the age of consent is 16, with an exception if the parties are less than 4 years apart in age. In California, by comparison, if two 17-year-olds have sex they’ve both committed a misdemeanor unless they are married.

none of this applies to the comment they cited as an example of defending pedophilia.

They literally investigated specific time frames of their voyeurism kink in medieval times extensively, wrote several paragraphs in favor of having children watch adults have sex, but couldn’t be bothered to do the most basic of research that sex abuse is harmful to children.

“they knew some things and didn’t know some things” isn’t worth getting so worked up over. they knew the mere concept of sex being taboo negatively affected them and didn’t want to make definitive statements about things they didn’t research. believe it or not lemmy comments are not dissertations and most people just talk and don’t bother researching every tangential topic just to make a point they want to make.

Gratefully, I’ve not experienced this.

And I don’t want to know where they are.

gross, probably the reason they got banned from reddit for the same thing, promoting or soliciting csam material.

I don’t really think Reddit minds that, actually, given that u/spez was the lead mod of r/jailbait until he got caught and hid who the mods were

Is it not encouraging that it is ostracised and removed from normal people? There are horrible parts of everything in nature; life is good despite those people and because of the rest combatting their shittiness.

You’re just seeing “survivor’s bias” (as nasty as that sounds in this case) not a general representation.

No, there really are a lot of pedophiles on Lemmy

There are a lot of pedophiles everywhere.

Unfortunately, the really rich ones get away with it for a long time, and few get away with it forever.

epstein files is never going to be fully released, too many rich and powerful people

Also Epstein got a lot of cover from non-criminal association with some rich and powerful people. Not everyone who rode on his plane was a nonce.

On the other hand, Trump was very closely associated with Epstein for an extended period. That’s not the same as someone glitzing up Epstein’s guest list in support of a charity fundraiser.

idk, I’m at like 7 months and the only time I was able to ctrl-F “pedo” in your history was when you were talking about Trump (which, fair) and then again about 4chan pedophiles (which, again).

I’ve been on Lemmy for years (even when .ml was the only instance) and hadn’t seen anything of the sort, though I don’t go digging for it either. I doubt that Lemmy is any worse than Facebook or Telegram when it comes to this.

From a month ago: https://lemmy.world/post/26165823/15375845

yeah that’s on me I only searched “pedo”

as said before, that person was not advocating for anything. he made a qualified statement, which you answered to with examples of kids in cults and flipped out calling him all kinds of nasty things.

Lmfao as I stated, they said that physical sexual abuse “PROBABLY” harms kids but they have only done research into their voyeurism kink as it applies to children.

Go off defending pedos, though 👏

Leak the subscribers’ details.

1.8m users, how the hell did they run that website for 3 years?

That’s unfortunately (not really sure) probably the fault of Germany’s approach to that. The usual approach is not to take these websites down but to try to find the guys behind them and seize them. The argument is: they will just use a backup and start a “KidFlix 2” or something like that. Some investigations show that this is not the case and deleting is very effective. Also, the German approach completely ignores the victim side. They have to deal with old men masturbating to them getting raped online. Very disturbing…

I think you are mixing two different aspects of this and of similar past cases. In the past there was often a problem with takedowns of such sites, because German prosecutors did not regard themselves as being in charge of takedowns if the servers were somewhere overseas. Their main focus was to get the admins and users of those sites and to get them into jail.

In this specific case they were observing this platform (together with prosecutors from other countries in an orchestrated operation) to gather as much data as possible about the structure, the payment flows, the admins and the users of this before moving into action and getting them arrested. The site was taken down meanwhile.

If you blow up (and delete) such a darknet service immediately upon discovery, you may get rid of it (temporarily) but you might not catch many of the people behind it.

This feels like one of those things where couch critics aren’t qualified. There’s a pretty strong history of three letter agencies using this strategy successfully in other organized crime industries.

Like I stated earlier, someone was caught red-handed, and snitched to get a lesser sentence.

Honestly, if the existing victims have to deal with a few more people masturbating to the existing video material and in exchange it leads to fewer future victims it might be worth the trade-off but it is certainly not an easy choice to make.

It doesn’t though.

The most effective way to shut these forums down is to register bot accounts scraping links to the clearnet direct-download sites hosting the material and then reporting every single one.

If everything posted to these forums is deleted within a couple of days, their popularity would falter. And victims much prefer having their footage deleted than letting it stay up for years to catch a handful of site admins.

Frankly, I couldn’t care less about punishing the people hosting these sites. It’s an endless game of cat and mouse and will never be fast enough to meaningfully slow down the spread of CSAM.

Also, these sites don’t produce CSAM themselves. They just spread it - most of the CSAM exists already and isn’t made specifically for distribution.

Who said anything about punishing the people hosting the sites. I was talking about punishing the people uploading and producing the content. The ones doing the part that is orders of magnitude worse than anything else about this.

I’d be surprised if many “producers” are caught. From what I have heard, most uploads on those sites are reuploads because it’s orders of magnitude easier.

Of the 1400 people caught, I’d say maybe 10 were site administrators and the rest passive “consumers” who didn’t use Tor. I wouldn’t put my hopes up too much that anyone who was caught ever committed child abuse themselves.

I mean, 1400 identified out of 1.8 million really isn’t a whole lot to begin with.
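For scale, here is a quick back-of-the-envelope calculation using only the figures quoted in this thread (1,393 identified, 79 arrested, 1.8 million users). The variable names are just for illustration.

```python
# Figures as quoted in the thread; nothing here is independently verified.
identified = 1_393
arrested = 79
users = 1_800_000

identified_share = identified / users
arrested_share = arrested / users

print(f"identified: {identified_share:.3%}")  # identified: 0.077%
print(f"arrested:   {arrested_share:.4%}")    # arrested:   0.0044%
```

So roughly 1 in 1,300 registered accounts was identified, assuming each account maps to one person, which is almost certainly not the case.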

If most are reuploads anyway that kills the whole argument that deleting things works though.

Not quite. Reuploading is at the very least an annoying process.

Uploading anything over Tor is a gruelling process. Downloading takes much time already, uploading even more so. Most consumer internet plans aren’t symmetrical either, with significantly lower upload than download speeds. Plus, you need to find a direct-download provider which doesn’t block Tor exit nodes and where uploading/downloading is free.

Taking something down is quick. A script scraping these forums which automatically reports the download links (any direct-download site quickly removes reported CSAM by the way - no one wants to host this legal nightmare) can take down thousands of uploads per day.

Making the experience horrible leads to a slow death of those sites. Imagine if 95% of videos on [generic legal porn site] led to a “Sorry! This content has been taken down.” message. How much traffic would the site lose? I’d argue quite a lot.

Well, some pedophiles have argued that AI generated child porn should be allowed, so real humans are not harmed, and exploited.

I’m conflicted on that. Naturally, I’m disgusted, and repulsed. I AM NOT ADVOCATING IT.

But if no real child is harmed…

I don’t want to think about it, anymore.

Issue is, AI is often trained on real children, sometimes even real CSAM (allegedly), which makes the “no real children were harmed” part not necessarily 100% true.

Also since AI can generate photorealistic imagery, it also muddies the water for the real thing.

I didn’t think about that.

The whole issue is abominable, and odious.

that is still CP, and distributing CP still harms children. eventually they want to move on to the real thing, as porn is not satisfying them anymore.

eventually they want to move on to the real thing, as porn is not satisfying them anymore.

Isn’t this basically the same argument as arguing violent media creates killers?

Exactly.

Understand you’re not advocating for it, but I do take issue with the idea that AI CSAM will prevent children from being harmed. While it might satisfy some of them (at first, until the high from that wears off and they need progressively harder stuff), a lot of pedophiles are just straight up sadistic fucks and a real child being hurt is what gets them off. I think it’ll just make the “real” stuff even more valuable in their eyes.

I feel the same way. I’ve seen the argument that it’s analogous to violence in videogames, but it’s pretty disingenuous since people typically play videogames to have fun and for escapism, whereas with CSAM the person seeking it out is doing so in bad faith. A more apt comparison would be people who go out of their way to hurt animals.

A more apt comparison would be people who go out of their way to hurt animals.

Is it? That person is going out of their way to do actual violence. It feels like arguing someone watching a slasher movie is more likely to make them go commit murder is a much closer analogy to someone watching a cartoon of a child engaged in sexual activity or w/e being more likely to make them molest a real kid.

We could make it a video game about molesting kids, with Postal or Hatred as our points of comparison, if it would help. I’m sure someone somewhere has made such a game, and I’m absolutely sure you’d consider COD to be for “fun and escapism” and someone playing that sort of game to be doing so “in bad faith”, despite both playing a simulation of something that is definitely illegal and the core of the argument being that one causes the person to want to do the illegal thing more and the other does not.

Somehow I doubt allowing it actually meaningfully helps the situation. It sounds like an alcoholic arguing that a glass of wine actually helps them not drink.

I agree.

There’s no helping actual pedophiles. That’s who they are.

That would be acceptable, but that’s unfortunately not what’s happening. Like I said: since the majority of the files are hosted on file-sharing platforms on the normal web, it’s way more effective to let them be deleted by those platforms. Some investigative journalists tried this and documented the frustration among the users of child porn websites at having to re-upload all the GBs/TBs of material, to the point that they would rather quit and shut down their sites than go the extra mile.

I used to work in netsec and unfortunately government still sucks at hiring security experts everywhere.

That being said, hiring here is extremely hard - you need to find someone with below-market salary expectations who is willing to work on such an ugly subject. Very few people can do that. I do believe money fixes this though. Just pay people more, and I’m sure every European citizen wouldn’t mind a 0.1% tax increase for a more effective investigation force.

Discovery of this kind of thing is as old as civilization.

Someone runs their mouth, or you catch someone with incriminating evidence on them. Then you lean on them to tell you where to go.

Most cases of “we can’t find anyone good for this job” can be solved with better pay. Make your opening more attractive, then you’ll get more applicants and can afford to be picky.

Getting the money is a different question, unless you’re willing to touch the sacred corporate profits…

they probably make double/triple in the private sector, I doubt govt can match that salary. FB probably even paid more, before they started using AI to sniff out CP.

I’m a senior dev and tbh I’d take a lower salary given the right cause, though having to work with this sort of material is probably the main bottleneck here. I can’t imagine how people working this can even fall asleep.

They have to deal with old men masturbating to them getting raped online.

The moment it was posted to wherever they were going to have to deal with that forever. It’s not like they can ever know for certain that every copy of it ever made has been deleted.

And yet there are cases like Kim Dotcom, Snowden, Manning, Assange…

it says “this hidden site”, meaning it was a site on the dark web. It probably took them a while to figure out where the site was located so they could shut it down.

it says “this hidden site”, meaning it was a site on the dark web.

Not just on the dark web (a term sometimes used loosely for anything not indexed by search engines, though strictly speaking that is the deep web): hidden sites are specifically a Tor thing (though Freenet/Hyphanet has something similar but it’s called something else). Usually a Tor hidden site has a URL that ends in .onion, and the Tor protocol has a structure for routing .onion addresses.

Bribes, most likely. Until the wrong people (the Right People) became aware of it.

1.8 million users and they only caught 1000?

I imagine it’s easier to catch uploaders than viewers.

It’s also probably more impactful to go for the big “power producers” simultaneously and quickly before word gets out and people start locking things down.

It also likely gives you the best $ spent/children protected rate, because you know the producers have children they are abusing which may or may not be the case for a viewer.

Hopefully, they went after the pimps and financiers.

Yeah, I don’t suspect they went after any viewers, only uploaders.

79

79 arrested, but it seems they found the identity of a thousand or so.

For now.

Geez, two million? Good riddance. Great job everyone!

Happy cake day!

Thank you! 😃

Good fucking riddance